Innovation in Machine Intelligence

Fully integrating hardware, software, and data

Systems Portfolio

- Autonomous Sanitizing Robot

- Mobile Picking Robot

- Intelligent Drone Vision

- Semantic Segmentation Framework

- Industrial Robot Simulator

- Autonomous Mobility System

- HVAC Ultraviolet Lights

Autonomous Sanitizing Robot

Ensuring a sanitized indoor environment is a top priority as businesses welcome employees and customers back on premises. The autonomous sanitizing robot sanitizes frequently touched surfaces and shared spaces both efficiently and thoroughly. With visible, continuous sanitizing measures in place, people are more aware of the company’s efforts to keep them safe, which puts them at ease as they move through buildings and facilities.

We have designed an affordable artificial intelligence (AI) powered mobile robot for precision sanitization of any indoor area. In commercial buildings, facilities, offices, restaurants, and schools, our robot sanitizes with a fog spray of disinfectant solution while navigating around tables, chairs, and other fixed objects. Key features of this robot include:

- Sanitizing fog particle size is below 10 microns to avoid residue on the floor, furniture, or your equipment

- AI machine vision targets the sanitizing fog at frequently touched surfaces such as tables, chairs, door handles, and window blinds

- AI sensors detect and classify the most common objects in an indoor environment with 94% accuracy

- A machine learning feature identifies new objects specific to your workplace

- Cameras and a real-time alert feature pause sanitization when people enter the area

- Five-gallon disinfectant capacity covers more than 40,000 square feet without a refill

- Intuitive dashboard app lets users specify ‘no-go’ zones

- Robot fleet management and routing customization through enterprise-grade apps

- Extended battery capacity for longer operating time, plus an autonomous charging station
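The no-go zone and people-detection features above can be combined into a single spray decision. The sketch below is a hypothetical illustration, not the robot's actual software: it assumes no-go zones are stored as polygons in floor-plan coordinates (meters) and that a separate perception module reports whether a person is present. The names `point_in_polygon` and `should_spray` are illustrative.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Polygon = List[Point]


def point_in_polygon(p: Point, poly: Polygon) -> bool:
    """Ray-casting test: count polygon edges crossed by a ray from p toward +x."""
    x, y = p
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def should_spray(position: Point, no_go_zones: List[Polygon], person_detected: bool) -> bool:
    """Spray only when nobody is in the area and the robot is outside every no-go zone."""
    if person_detected:
        return False  # pause sanitization while people are present
    return not any(point_in_polygon(position, zone) for zone in no_go_zones)


server_room = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]  # hypothetical no-go zone
print(should_spray((2.0, 1.5), [server_room], person_detected=False))  # False: inside zone
print(should_spray((6.0, 1.5), [server_room], person_detected=False))  # True
print(should_spray((6.0, 1.5), [server_room], person_detected=True))   # False: person present
```

Ray casting is a simple choice here because dashboard-drawn zones are usually small polygons; a production system would more likely mark no-go zones directly in the navigation map.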

Autonomous sanitizing robots also reduce exposure for janitorial staff, allowing them to focus on areas of the office that require more manual cleaning. While there are many short-term fixes for cleanliness and social distancing in shared spaces, a long-term investment in clean and sanitized facilities can change how employees and customers perceive a business. Higher workplace satisfaction among employees also has positive effects on the bottom line, so an effective sanitization program can indirectly benefit profitability.

Mobile Picking Robot

Innovative business outcomes can be delivered when automation powered by artificial intelligence sits at the core of an intelligent enterprise. The emerging consumer products retail ecosystem can deliver high-value use cases that connect digital consumer insights with a shopper-centric supply chain. Some of these high-return use cases can be brought to life by integrating enterprise systems with autonomous mobility systems. The fully integrated artificial intelligence (AI) system can recognize objects and handle them without human intervention while navigating storefronts, shop floors, fulfillment centers, distribution centers, and warehouses.

Our autonomous picking robot can accurately detect and pick items in unstructured environments. The robot receives an order to pick several items and navigates to the shelf. It recognizes the item to pick using machine learning and positions itself to reach out and grab the item. The robot's view of the picking action can be seen in the video above. The left side of the screen shows the machine vision as the robot navigates to pick the item; when the desired object is recognized, the robot extends its arm and picks it. The right side of the screen shows the LiDAR map that the robot uses to navigate. The mobile robot brings the item to a transport robot that holds the rest of the order, and the transport robot delivers the filled bin to the packing station for boxing and shipping. The picking robot then returns to pick its next order. The AI mobile picking robot delivers low-cost, fast, and reliable mobile picking for structured and unstructured environments, including retail, warehouse, and medical applications.
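The pick-and-hand-off cycle described above can be modeled as a small state machine. This is a hypothetical sketch: the state names, `next_state`, and `run_order` are illustrative, it assumes one item is carried to the transport robot per trip, and real navigation, recognition, and grasping would run in separate modules.

```python
from enum import Enum, auto
from typing import List


class PickState(Enum):
    WAIT_FOR_ORDER = auto()
    NAVIGATE_TO_SHELF = auto()
    RECOGNIZE_ITEM = auto()
    GRASP_ITEM = auto()
    HAND_OFF_TO_TRANSPORT = auto()


def next_state(state: PickState, items_remaining: int, step_ok: bool = True) -> PickState:
    """Advance the picking cycle by one step.

    If step_ok is False (e.g. the item was not recognized), the robot stays in
    the current state and retries.
    """
    if not step_ok:
        return state
    if state is PickState.HAND_OFF_TO_TRANSPORT:
        # Item delivered to the transport robot: fetch the next item or wait for a new order.
        return PickState.NAVIGATE_TO_SHELF if items_remaining > 0 else PickState.WAIT_FOR_ORDER
    order = [PickState.WAIT_FOR_ORDER, PickState.NAVIGATE_TO_SHELF,
             PickState.RECOGNIZE_ITEM, PickState.GRASP_ITEM, PickState.HAND_OFF_TO_TRANSPORT]
    return order[order.index(state) + 1]


def run_order(num_items: int) -> List[PickState]:
    """Simulate one order in which every step succeeds; return the states visited."""
    if num_items <= 0:
        return []
    state, remaining, trace = PickState.WAIT_FOR_ORDER, num_items, []
    while True:
        state = next_state(state, remaining)
        trace.append(state)
        if state is PickState.HAND_OFF_TO_TRANSPORT:
            remaining -= 1
        elif state is PickState.WAIT_FOR_ORDER:
            return trace


print([s.name for s in run_order(1)])
# ['NAVIGATE_TO_SHELF', 'RECOGNIZE_ITEM', 'GRASP_ITEM', 'HAND_OFF_TO_TRANSPORT', 'WAIT_FOR_ORDER']
```

Keeping failed steps in the same state gives a natural retry loop; a real controller would also add timeouts and an error state.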

This fully integrated AI system is built by unifying the power of edge semiconductor processors, accelerators, sensor fusion, and trained neural networks. Both deep learning in the cloud and machine learning optimization at the edge are implemented. Industry 4.0 business use cases are defined and deployed with these AI systems. The technical competencies include semantic segmentation neural networks, machine vision, sensor fusion with LiDAR, an RGB-D camera, and an IMU, and the Robot Operating System (ROS).

Intelligent Drone Vision

A drone vision AI system was developed to detect cracks in roads and streets. Instead of the traditional method of first recording camera footage to the cloud and then analyzing it for cracks, this real-time AI system uses advanced image processing techniques and path-finding algorithms to detect cracks on a pixel-by-pixel basis.

The drone camera footage is split into frames, which are pre-processed with a variety of OpenCV image filters such as local mean, Gaussian and bilateral blurring, and morphological transformations. The pre-processed image is then searched for minimum cost paths based on grayscale pixel intensity, which allows cracks or segments of dark discoloration to be connected via paths at the pixel level. These detections can then be overlaid on the original footage to outline the detected cracks. The paths are computed over partitioned regions of each image, with the highest-intensity pixels in each region designated as crack points. A minimum cost function or path-finding algorithm such as Dijkstra's then connects these crack points to other pixels of similar intensity to determine the path and shape of the detected cracks.
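The path-finding step can be illustrated with a minimal Dijkstra sketch on a tiny grayscale grid. It assumes pre-processing has already produced a 2D list of 0-255 intensities in which crack pixels are dark, so the cost of stepping onto a pixel is its intensity and the cheapest path between two crack points follows the crack (if the pre-processed image is inverted so cracks are bright, use `255 - intensity` as the cost instead). The function name `min_cost_path`, the 8-connected neighborhood, and the hand-picked endpoints are illustrative assumptions, not the project's code.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Pixel = Tuple[int, int]  # (row, col)


def min_cost_path(gray: List[List[int]], start: Pixel, goal: Pixel) -> Optional[List[Pixel]]:
    """Dijkstra's algorithm on an 8-connected pixel grid.

    Entering a pixel costs its grayscale intensity (0-255), so the cheapest
    route between two crack points follows dark pixels.
    """
    rows, cols = len(gray), len(gray[0])
    dist: Dict[Pixel, int] = {start: gray[start[0]][start[1]]}
    prev: Dict[Pixel, Pixel] = {}
    heap = [(dist[start], start)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if (r, c) == goal:
            # Follow predecessor links back to the start to recover the path.
            path = [goal]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist[(r, c)]:
            continue  # stale heap entry; a cheaper route was already found
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < rows and 0 <= nc < cols:
                    nd = d + gray[nr][nc]
                    if nd < dist.get((nr, nc), float("inf")):
                        dist[(nr, nc)] = nd
                        prev[(nr, nc)] = (r, c)
                        heapq.heappush(heap, (nd, (nr, nc)))
    return None  # goal unreachable


# Toy 5x5 road patch: bright asphalt (200) with a dark diagonal crack.
image = [
    [200, 200, 200, 200, 200],
    [10, 200, 200, 200, 200],
    [200, 12, 200, 200, 200],
    [200, 200, 15, 11, 200],
    [200, 200, 200, 200, 9],
]
print(min_cost_path(image, (1, 0), (4, 4)))
# [(1, 0), (2, 1), (3, 2), (3, 3), (4, 4)]
```

On real 1920x1080 frames the same idea is usually run on a NumPy array or on the GPU, which is consistent with the CUDA parallelization mentioned in this section.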

Cracks are detected reasonably accurately with much less effort than manual inspection typically requires. A 1920x1080 frame typically takes less than 10 seconds to process. To improve real-time performance, the machine learning algorithms are optimized for the NVIDIA Jetson Xavier processor so that footage is processed on board the drone. Performance was further increased by parallelizing the required processing with CUDA. The detected cracks are further classified based on desired metrics and used to estimate the required repairs, materials, and costs.
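As one example of a metric that could feed a repair estimate, a detected crack path can be converted into a physical length. This sketch assumes the path is a list of (row, col) pixels such as a minimum cost path search returns, and that the ground sampling distance (meters per pixel) is known from the drone's altitude and camera; the function name and the 5 mm per pixel figure are hypothetical.

```python
import math
from typing import List, Tuple


def crack_length_m(path: List[Tuple[int, int]], meters_per_pixel: float) -> float:
    """Approximate crack length by summing the Euclidean distance between
    consecutive path pixels (diagonal steps count as sqrt(2) pixels)."""
    length_px = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    return length_px * meters_per_pixel


# Crack path with three diagonal steps and one straight step,
# at a hypothetical ground sampling distance of 5 mm per pixel.
crack = [(1, 0), (2, 1), (3, 2), (3, 3), (4, 4)]
print(round(crack_length_m(crack, 0.005), 4))  # 0.0262
```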

Semantic Segmentation Framework

Segmentation is essential for image analysis tasks. Semantic segmentation is image classification at the pixel level: it associates each pixel of an image with a class label. In an image that contains many cars, semantic segmentation labels the pixels of every car with the same class, car. Semantic segmentation is crucial in self-driving cars and robotics because the models must understand the context of the environment in which they operate.
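Because semantic segmentation assigns a class to every pixel, a network's output can be read as one score per class per pixel, and the label map is the per-pixel argmax. The sketch below assumes scores arranged as `scores[class][row][col]` and a hypothetical three-class label set; it illustrates only the labeling step, not a trained network.

```python
from typing import List

CLASSES = ["background", "road", "car"]  # hypothetical label set


def label_map(scores: List[List[List[float]]]) -> List[List[str]]:
    """Return the highest-scoring class name for each pixel.

    scores[k][r][c] is the network's score for class k at pixel (r, c).
    """
    num_classes = len(scores)
    rows, cols = len(scores[0]), len(scores[0][0])
    return [
        [CLASSES[max(range(num_classes), key=lambda k: scores[k][r][c])] for c in range(cols)]
        for r in range(rows)
    ]


scores = [
    [[0.90, 0.10], [0.20, 0.10]],  # background
    [[0.05, 0.80], [0.30, 0.10]],  # road
    [[0.05, 0.10], [0.50, 0.80]],  # car
]
print(label_map(scores))  # [['background', 'road'], ['car', 'car']]
```

Both bottom pixels receive the same `car` label, which is exactly the behavior described above: semantic segmentation does not separate individual car instances.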

The steps for training a semantic segmentation network are as follows:

- Analyze training data for semantic segmentation

- Create a semantic segmentation network

- Train a semantic segmentation network

- Evaluate and inspect the results of semantic segmentation
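For the evaluation step, a common metric is intersection over union (IoU) per class, averaged into mean IoU. The sketch assumes ground truth and prediction are same-sized 2D grids of integer class IDs and skips classes that appear in neither; it is a generic illustration, not tied to any particular training toolbox.

```python
from typing import Dict, List

LabelGrid = List[List[int]]


def per_class_iou(truth: LabelGrid, pred: LabelGrid, num_classes: int) -> Dict[int, float]:
    """IoU = (pixels labeled k in both maps) / (pixels labeled k in either map)."""
    ious = {}
    for k in range(num_classes):
        inter = union = 0
        for t_row, p_row in zip(truth, pred):
            for t, p in zip(t_row, p_row):
                in_t, in_p = t == k, p == k
                inter += int(in_t and in_p)
                union += int(in_t or in_p)
        if union:  # skip classes absent from both maps
            ious[k] = inter / union
    return ious


def mean_iou(truth: LabelGrid, pred: LabelGrid, num_classes: int) -> float:
    ious = per_class_iou(truth, pred, num_classes)
    if not ious:
        raise ValueError("no class appears in either label map")
    return sum(ious.values()) / len(ious)


truth = [[0, 0, 1], [0, 1, 1]]
pred = [[0, 1, 1], [0, 1, 1]]
print(per_class_iou(truth, pred, 2))      # {0: 0.6666666666666666, 1: 0.75}
print(round(mean_iou(truth, pred, 2), 3))  # 0.708
```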

Applications for semantic segmentation include:

- Autonomous driving

- Industrial inspection

- Classification of terrain visible in satellite imagery

- Medical imaging analysis

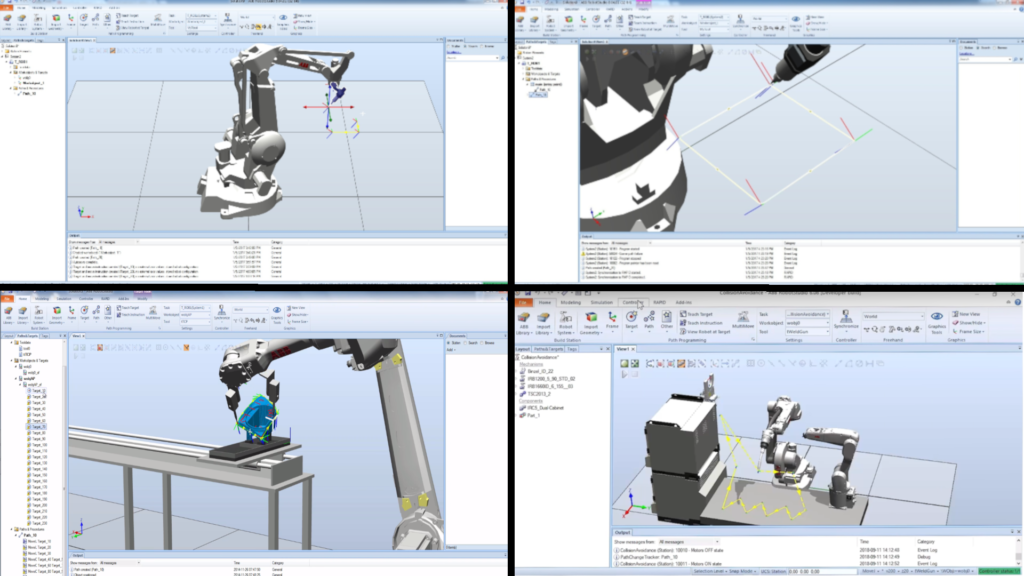

Industrial Robot Simulator

Human arms have been assembling items in factories for years. Now industrial robotic arms do the same work faster and more accurately. In manufacturing, one of the most common robots is the robotic arm. This metal arm, usually made up of four to six joints, can be used for many manufacturing applications, including welding, material handling, and material removal. An industrial robot arm is a marvel of engineering in that it moves much like our own arms: it closely resembles a human arm, with a wrist, forearm, elbow, and shoulder. A six-axis robot has six degrees of freedom, allowing it to move in six different ways, whereas the human arm has seven degrees of freedom. However, industrial robotic arms move much faster than human arms and, without proper safety measures, can be dangerous. The goal of this AI system competency is therefore to design and implement an application that simulates robot arm tasks to reduce risk and increase productivity.

The robot arm simulation process can be separated into four main parts: creating work objects; importing models of the robot arm, tool object, and work objects; planning the motion paths; and running the simulation. First, work objects are created in SolidWorks, a 3D modeling tool, and exported as files that can be used as objects in the simulation environment. RobotStudio is then used for all simulation tasks. The first simulation step is to import models of the robot arm, tool object, and work objects. Different robot arm models have different specifications, such as range of motion and degrees of freedom, so the virtual robot arm model should match the physical robot arm. Next, a tool object is imported as the tool used by the robot arm, followed by the work objects exported from SolidWorks. The next step is to plan the motion paths. Finally, the simulation is executed along those paths. Once the simulation has been thoroughly tested, the task can be deployed to a real robot arm.
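The requirement that the virtual arm match the physical arm can be made concrete with a pre-deployment check: every joint configuration along a planned path should stay within the real robot's joint limits. The limits below are placeholder values for a hypothetical six-axis arm, not the specifications of any particular robot model, and the check is independent of RobotStudio's own tooling.

```python
from typing import List, Sequence, Tuple

# Placeholder (min, max) joint limits in degrees for a hypothetical six-axis arm.
# Replace them with the values from the physical robot's datasheet.
JOINT_LIMITS_DEG: List[Tuple[float, float]] = [
    (-170.0, 170.0), (-120.0, 120.0), (-120.0, 80.0),
    (-180.0, 180.0), (-120.0, 120.0), (-360.0, 360.0),
]


def limit_violations(path: Sequence[Sequence[float]],
                     limits: Sequence[Tuple[float, float]] = JOINT_LIMITS_DEG
                     ) -> List[Tuple[int, int, float]]:
    """Return (waypoint index, joint index, angle) for every joint angle on the
    planned path that falls outside the arm's limits (indices are 0-based)."""
    violations = []
    for i, config in enumerate(path):
        if len(config) != len(limits):
            raise ValueError(f"waypoint {i} has {len(config)} joints, expected {len(limits)}")
        for j, (angle, (lo, hi)) in enumerate(zip(config, limits)):
            if not lo <= angle <= hi:
                violations.append((i, j, angle))
    return violations


planned = [
    [0, 0, 0, 0, 0, 0],
    [90, 45, 30, 0, 60, 180],
    [175, 0, 95, 0, 0, 0],  # joint 1 (index 0) and joint 3 (index 2) exceed their limits
]
print(limit_violations(planned))  # [(2, 0, 175), (2, 2, 95)]
```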

The technical competencies include software tools such as SolidWorks and RobotStudio. In conclusion, using such a simulation platform reduces risk, speeds up start-up, shortens changeover, and increases productivity.

Autonomous Mobility System

As factories and manufacturing plants become more advanced and automated, moving people and goods around them becomes increasingly challenging. The need for a robust autonomous navigation unit that can carry payloads in and around such environments is growing rapidly. This project takes a different approach to the problem: automotive-grade sensors on an industrial-certified robot base, controlled by a proven software stack used in autonomous automobiles, all running on a small, cost-effective, and power-efficient edge compute device, in this case the NVIDIA Jetson TX2.

Autonomous navigation consists of four main parts:

- Mapping the environment

- Localization to determine position of the unit in the environment

- Global Path Planning to determine the best way to get to the goal

- Local Path Planning to avoid any immediate obstacles
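Global path planning is often done by searching an occupancy grid built during the mapping step. The sketch below uses A* with a Manhattan-distance heuristic on a 4-connected grid; it is a generic illustration of the idea, not Autoware's planner, and the grid and coordinates are made up.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* over a 4-connected occupancy grid (0 = free, 1 = occupied), unit step cost."""
    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates the cost of 4-connected unit moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    g: Dict[Cell, int] = {start: 0}
    came_from: Dict[Cell, Cell] = {}
    open_heap = [(h(start), start)]
    while open_heap:
        _, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while path[-1] != start:
                path.append(came_from[path[-1]])
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                tentative = g[cell] + 1
                if tentative < g.get((nr, nc), float("inf")):
                    g[(nr, nc)] = tentative
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (tentative + h((nr, nc)), (nr, nc)))
    return None  # no collision-free route


# Toy warehouse aisle: a shelf row (1s) blocks the direct route downward.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
# [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3), (2, 2), (2, 1), (2, 0)]
```

The global planner only produces this coarse route; the local planner listed above would then adjust the robot's motion around obstacles that were not in the map.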

The main application of this autonomous mobility system is in warehouses and manufacturing plants. We assumed the system would always operate in a GPS-denied environment, which led us to explore open-source navigation options that rely mainly on LiDAR for localization. The navigation framework chosen was Autoware, open-source software originally developed at the Computing Platforms Federated Laboratory at Nagoya University, Japan, and later maintained by Tier IV.

The automotive-grade sensors chosen include a Velodyne LiDAR, Leopard Imaging 4K cameras, and Bosch IMUs. The system was finally integrated with a Ubiquity Robotics Magni base for actuation.

Applications:

- Autonomous transportation of goods and personnel in manufacturing plants

- Flexible conveyor systems

- Warehouse and inventory management

- Retail shop floors

- Outdoor navigation in geofenced areas

HVAC Ultraviolet Lights

In addition to stressing the importance of social distancing and handwashing to fight COVID-19, businesses can improve air quality by properly installing and maintaining existing air filtration systems. Approximately 75% of the air in the office is recirculated and filtered indoor air. As a result, air filtration systems and humidity controls are top of mind for building management and companies.

We provide wireless sensors that integrate with existing Heating, Ventilation, and Air Conditioning (HVAC) systems to verify that UV light installations in ducts are working effectively. Our autonomous sanitizing robots also use these HVAC control sensors to turn the HVAC off automatically when the robots are dispatched to the workplace and to turn it back on when the robots complete routine sanitization. We facilitate compliance with ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) and CDC guidelines, and we recommend system upgrades that include supplying clean air to susceptible occupants, containing contaminated air and/or exhausting it outdoors, diluting the air in a space with clean, filtered outdoor air, and cleaning the air within a room.
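The HVAC interlock described above amounts to a simple rule: keep the HVAC off while any robot is sanitizing, and turn it back on once every dispatched robot has finished. The sketch assumes a hypothetical controller that tracks robot IDs; the `HvacInterlock` class and its methods are illustrative, and the real sensor and actuator interfaces are not shown.

```python
from typing import Set


class HvacInterlock:
    """Turns the HVAC off when the first robot is dispatched and back on
    when the last active robot reports that sanitization is complete."""

    def __init__(self) -> None:
        self.active_robots: Set[str] = set()
        self.hvac_on = True

    def robot_dispatched(self, robot_id: str) -> None:
        self.active_robots.add(robot_id)
        self.hvac_on = False  # HVAC stays off while any robot is sanitizing

    def robot_completed(self, robot_id: str) -> None:
        self.active_robots.discard(robot_id)
        if not self.active_robots:
            self.hvac_on = True


interlock = HvacInterlock()
interlock.robot_dispatched("robot-1")
interlock.robot_dispatched("robot-2")
interlock.robot_completed("robot-1")
print(interlock.hvac_on)  # False: robot-2 is still sanitizing
interlock.robot_completed("robot-2")
print(interlock.hvac_on)  # True
```

Tracking the set of active robots, rather than a single flag, keeps the HVAC off until the whole fleet is done.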

The Wholistic AI Platform

The wholistic artificial intelligence (AI) platform unifies the power of people, machines, and the latest advances in machine learning, sensor fusion, and semiconductor processors. This new AI system architecture is transforming every industry, from automotive to retail. It requires extensive high-performance computing and connectivity infrastructure. It can recognize the broader context in which it operates and respond accordingly, making autonomous decisions or providing data-driven recommendations to users. The combination of contextual perception and sensor fusion is paving the way for machines to learn and act with real-time intelligence, in a way that works seamlessly and intuitively for all industry stakeholders.

We are recognized for addressing artificial intelligence (AI) holistically across multiple industry use cases. This means equipping enterprises and machines with complete systems, not components, that have new AI-centric capabilities. Hardware and software combined are treated as a single product architecture in their own right. We implement an adaptive testing framework that lets AI systems self-correct faults and remain reliable under unanticipated circumstances. Advanced machine learning techniques, in the form of evolutionary algorithms and reinforcement learning, are applied to learn the minimal set of faults that causes degraded or failed behavior in an autonomous system controlled by autonomy software.
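The evolutionary half of this search can be illustrated with a toy (1+1) evolutionary algorithm. Everything here is a hypothetical stand-in: `toy_system_fails` replaces a real simulation of the autonomy software, fault sets are encoded as bit vectors, and the fitness function rewards sets that cause failure while preferring smaller ones. It sketches the general technique, not the platform's testing framework.

```python
import random
from typing import Callable, List

FaultSet = List[int]  # fault_set[i] == 1 means fault i is injected


def fitness(faults: FaultSet, system_fails: Callable[[FaultSet], bool]) -> int:
    """Any fault set that causes failure outscores every set that does not;
    among failing sets, smaller ones score higher."""
    size = sum(faults)
    return len(faults) + 1 - size if system_fails(faults) else -size


def minimal_failing_faults(n: int, system_fails: Callable[[FaultSet], bool],
                           generations: int = 2000, seed: int = 0) -> FaultSet:
    """(1+1) evolutionary algorithm: mutate one parent, keep the child if it is no worse."""
    rng = random.Random(seed)
    parent = [1] * n  # start with every fault injected
    if not system_fails(parent):
        raise ValueError("system does not fail even with every fault injected")
    best = fitness(parent, system_fails)
    for _ in range(generations):
        # Standard bit-flip mutation: flip each bit independently with probability 1/n.
        child = [bit ^ (rng.random() < 1.0 / n) for bit in parent]
        score = fitness(child, system_fails)
        if score >= best:
            parent, best = child, score
    return parent


# Hypothetical stand-in for a simulation run: the autonomy stack fails only
# when faults 2 and 5 (say, a LiDAR dropout and IMU drift) occur together.
def toy_system_fails(faults: FaultSet) -> bool:
    return faults[2] == 1 and faults[5] == 1


result = minimal_failing_faults(8, toy_system_fails)
print([i for i, bit in enumerate(result) if bit])  # [2, 5]
```

Starting from the full fault set guarantees a failing parent, so the search only ever shrinks toward a minimal failing combination; a reinforcement learning approach would instead learn when to inject faults during a run.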