Innovation in Augmented Intelligence

Symphony of humans and AI working together

Systems Portfolio

- Collaborative Intelligence Space

- Interactive Care Pathways

- Personalized Wellness Assistant

- Voice Assisted Appliances

- Visual SLAM Accelerator

- Collaborative Robots System

- Edge Intelligence System

Collaborative Intelligence Space

Collaborative intelligence is where the future of work lies. It opens a new realm of possibilities by applying artificial intelligence (AI) to improve decision making and outcomes. For an enterprise to survive in today's constantly evolving marketplace, embracing collaborative intelligence is increasingly necessary. To take full advantage of it, organizations need to learn how humans can work most effectively with computer systems and intelligent machines, and how to rebuild business processes to support that collaboration. Done well, this gives people the information and insights they need to make decisions quickly, leaving more time and headspace for productive, meaningful tasks. When human workers and AI work together, administrative tasks become less tedious and decision making becomes easier.

We have launched the icuro.ai platform, where humans and AI collaborate so that each actively reinforces the other’s complementary strengths. It includes the following key features:

- Face recognition for sign in

- Enterprise code editors

- Live enterprise lab access

- White boards and live streams

- Conversational scribing and emailing

- Video and audio productivity tools

The possibilities afforded by collaborative intelligence are enormous, and they are already shaping the future of work in numerous ways. The platform can be quickly customized and deployed for any organization while meeting its enterprise security standards and guidelines.

Interactive Care Pathways

Automotive-grade sensors were chosen, including Velodyne LiDAR, Leopard Imaging 4K cameras, and Bosch IMUs. The system was then integrated with a Ubiquity Robotics Magni base for actuation.

Applications:

- Autonomous transportation of goods and personnel in manufacturing plants

- Flexible conveyor systems

- Warehouse and inventory management

- Retail shop floors

- Outdoor navigation in geo-fenced area

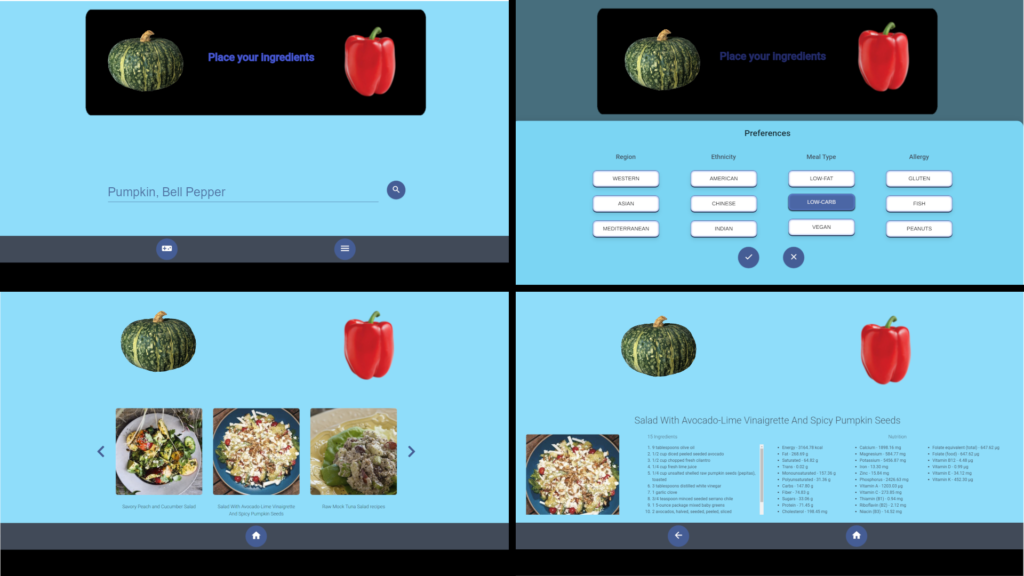

Personalized Wellness Assistant

Intelligent appliances with multimedia capability are becoming part of daily life. The home is one of the most prominent environments where intelligent appliances and smart wearables are interconnected to deliver personalized healthy choices. Modern lifestyles leave people little time to cook healthy food at home or develop personalized wellness plans. The AI-powered personalized wellness system is architected to deliver an immersive experience for an enjoyable and healthy lifestyle.

The system projects a user-friendly screen onto any surface or table and turns that surface into a touch screen using advanced image processing techniques. It helps users choose diet preferences based on personal needs such as low-fat or gluten-free. The system detects ingredients placed on the table and recommends recipes in real time based on those ingredients and the chosen diet preferences. It also integrates with smart wearables to provide a comprehensive view of the user's wellness profile, including calories, weight, and other parameters, to support sustainable health benefits.
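
The recommendation step can be pictured as a filter on diet preferences followed by a ranking on ingredient match. The following is a minimal sketch under stated assumptions: the `Recipe` type, the `recommend` function, the scoring rule, and the sample catalog are all hypothetical and chosen for illustration; they are not taken from the deployed system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recipe:
    name: str
    ingredients: frozenset  # ingredient names the recipe needs
    diet_tags: frozenset    # e.g. {"low-fat", "gluten-free"}


def recommend(recipes, detected, preferences, top_k=3):
    """Rank recipes that satisfy every diet preference by ingredient coverage."""
    detected = frozenset(detected)
    preferences = frozenset(preferences)
    scored = []
    for recipe in recipes:
        # Skip recipes that miss any requested diet tag.
        if not preferences <= recipe.diet_tags:
            continue
        # Coverage: fraction of the recipe's ingredients already on the table.
        coverage = len(recipe.ingredients & detected) / len(recipe.ingredients)
        if coverage > 0:
            scored.append((coverage, recipe.name))
    # Highest coverage first; ties are broken alphabetically for stable output.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [name for _, name in scored[:top_k]]


# Hypothetical catalog, for illustration only.
CATALOG = [
    Recipe("tomato salad", frozenset({"tomato", "cucumber", "olive oil"}),
           frozenset({"gluten-free", "low-fat"})),
    Recipe("pasta pomodoro", frozenset({"pasta", "tomato", "garlic"}),
           frozenset({"low-fat"})),
    Recipe("omelette", frozenset({"egg", "cheese"}),
           frozenset({"gluten-free"})),
]

print(recommend(CATALOG, ["tomato", "cucumber"], ["gluten-free"]))
```

In a real deployment the `detected` list would come from the vision model and the catalog from a recipe database; the sketch only shows how the two inputs named in the text could be combined.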

This fully integrated AI edge system uses deep learning in the cloud for rapid model training and applies pruning techniques at the edge for real-time inference. An Nvidia Xavier, one of the world's fastest AI-at-the-edge processors, is used for deployment to provide GPU-accelerated image processing and machine learning.

The software stack includes TensorFlow, Python, Flask, OpenCV, NumPy, JavaScript, ReactJS, jQuery, Electron, WebRTC, and an AWS SageMaker instance. The hardware stack includes an Nvidia Xavier processor, an RGB-Depth camera, and a projector. The AWS SageMaker instance is used for cloud training of the deep neural network models; it has 8 vCPUs, an Nvidia Tesla V100 GPU, and 61 GB of memory.
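
The text does not say which pruning technique is used. As one common possibility, magnitude pruning zeroes out the weights with the smallest absolute values so the model is cheaper to run on an edge device. The sketch below is an assumption for illustration only: the function name `magnitude_prune` and the plain list-of-lists weight layout are hypothetical, and a production system would more likely use a framework's own pruning tools.

```python
def magnitude_prune(weights, sparsity):
    """Zero out roughly the smallest-magnitude fraction `sparsity` of weights.

    `weights` is a list of rows (lists of floats). A new matrix is returned;
    the input is left unchanged.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    magnitudes = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    if n_prune == 0:
        return [list(row) for row in weights]
    # Every weight at or below this magnitude is removed. Ties at the
    # threshold can prune slightly more than the requested fraction.
    threshold = magnitudes[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row]
            for row in weights]


layer = [[0.1, -0.8], [0.05, 0.4]]
print(magnitude_prune(layer, 0.5))
```

After pruning, the model is usually fine-tuned briefly to recover accuracy, and the resulting sparsity only speeds up inference if the runtime can exploit it.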

Voice Assisted Appliances

As employees return to work, kitchens and break rooms will soon be high-traffic areas at lunchtime. Voice-assisted appliances help employees feel safer eating their meals: because the appliances are touched less, they need less cleaning with harsh chemicals, which reduces the risk of contaminating food. The main advantage of voice-activated appliances is that they rely on one of the most natural human behaviors to command, interact with, and monitor appliances. Speaking is something almost everyone can do, and there is no learning curve.

Our systems integrate existing appliances with voice assistants such as Amazon Alexa, Google Assistant, or Apple Siri so they can be controlled by voice alone. Supported appliances include coffee makers, water dispensers, microwaves, and more.

Visual SLAM Accelerator

In augmented intelligence, a common business problem is how to make a machine or robot move around and perform tasks. To do so, the machine or robot needs to know its location in its environment. This problem is addressed with simultaneous localization and mapping (SLAM): the computational problem of constructing a map of an unknown area while simultaneously tracking where the machine or robot is within that map.

Various algorithms tackle this problem. In general, a sensor such as a LiDAR or camera is used, and data from the sensor is stitched together to form a map; this is the mapping process. The same sensor can then be used to determine where it is on the map by running a suitable matching algorithm; this is localization. With both in place, the machine or robot has a sense of where it is in its local space. Because we use a camera to perform SLAM here, the approach is known as Visual SLAM, or VSLAM.

Once the machine or robot knows its location, navigation and other tasks become possible. As with SLAM, there are many algorithms for path planning. Using anything from simple waypoint following to search algorithms such as A*, the machine can move around the map.
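
To make the path-planning step concrete, here is a minimal sketch of A* on a small occupancy grid. It assumes a 2D grid where 0 is free space and 1 is an obstacle, unit-cost moves in four directions, and a free start cell; the function name `astar` and the grid layout are illustrative and are not taken from the system described here.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (obstacle) cells.

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates with unit-cost 4-way moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimate, cost so far, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    estimate = new_cost + heuristic((nr, nc))
                    heapq.heappush(open_heap, (estimate, new_cost, (nr, nc)))
    return None


GRID = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(astar(GRID, (0, 0), (2, 0)))
```

On a real robot the grid would be derived from the SLAM map, and the resulting cell sequence would be handed to a controller as waypoints.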

We chose an open-source SLAM algorithm and applied it to create maps for use in many augmented intelligence applications. Using a single RGB-Depth camera, it can create a dense point cloud of an area. This point cloud can be used in applications such as surveillance robots, exploration of unknown places, and terrain mapping.

By applying Radeon Open Compute (ROCm) and AMD MIVisionX, we delivered this framework so that it uses OpenVX for feature detection and OpenCL for GPU acceleration.
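
A dense point cloud from an RGB-Depth camera is typically built by back-projecting each depth pixel through the pinhole camera model. The sketch below shows that single-frame step only, assuming depth in metres, a value of 0 for missing readings, and known intrinsics `fx`, `fy`, `cx`, `cy`; the function name and the sample values are hypothetical, and a full SLAM pipeline would additionally transform each frame by the estimated camera pose before merging.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into 3D points in the camera frame.

    `depth` is a list of rows of depth values in metres. Pixel (u, v) with
    depth z maps to x = (u - cx) * z / fx, y = (v - cy) * z / fy, z.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid depth reading at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with made-up intrinsics, for illustration only.
frame = [[0.0, 2.0],
         [1.0, 0.0]]
print(depth_to_points(frame, fx=2.0, fy=2.0, cx=0.5, cy=0.5))
```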

Applications:

- Creating 3D models of real space

- Creating 3D models for virtual reality

- Creating 3D models of game environments

- Perception for machine, robot, and drone applications

Collaborative Robots System

Robots are getting smarter, safer, and easier to use thanks to advances in their components. The robotics industry has introduced collaborative robots equipped with intelligent features to work in close proximity to their human counterparts. These robots combine sophisticated motion control, motors, edge processors, and sensors, all integrated with an AI system. Rather than replacing humans, collaborative robots help them do their jobs more efficiently and with more power. By bringing together collaborative robots and enterprise business processes for the first time, we demonstrate the strength of innovation in delivering intelligent augmented services across multiple industries.

Our hands-on technical capabilities in deploying collaborative robots are as follows:

- Development of the smart connected robot system

- The key components behind robot and human interfaces

- Configuration systems that train collaborative robots

- Robot intelligence that offers flexibility

- Enabling custom features and enhancing robot capabilities

Pepper is the world’s first social humanoid robot to recognize faces and basic human emotions. Pepper is optimized for human interaction and can engage with people through conversation. We have deployed natural language processing techniques using Google Dialogflow for digital health and retail use cases. This lets enterprise workers rely on Pepper for repetitive tasks so they can concentrate on their main work.

Edge Intelligence System

In the AI-driven era, the term augmented intelligence describes how AI interacts with people: not by replacing them, but by improving on what they already know. While the system can learn without human intervention, it augments the user’s decision-making process and capabilities by providing deep insights that are otherwise hidden or inaccessible. Machines learning from human interaction in this way create an even more powerful set of actionable intelligence for businesses to act upon.

Our AI system competency development focuses on designing and deploying augmented intelligence capabilities into products and services. The key competencies include autonomous system architecture for reinforcement learning neural networks, edge computing processors, sensor fusion, and natural language processing. It begins with developing and deploying an autonomous edge stack for mobility, machines, and equipment that interact easily with humans across multiple industry domains.

The Edge AI Platform

The edge artificial intelligence (AI) platform unlocks the business value of sensor data by delivering actionable intelligence at the edge of the network, near the source of the data. This edge AI computing architecture involves a large number of independent nodes that can autonomously execute business logic and computations, including security analytics, without a centralized authority. It acquires and analyzes real-time data from sensors and Internet of Things (IoT) products, and integrates technologies such as advanced machine learning, security, and mobility on an edge computing processor. Running machine learning algorithms and generating intelligence at or near the source of the data reduces the communication bandwidth required between the sensors and the central data center.
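
The bandwidth saving comes from sending summaries instead of raw samples. As a rough illustration, an edge node might reduce a window of readings to a few statistics plus any outliers before contacting the data center. The sketch below assumes a simple z-score rule and a hypothetical `summarize_window` function; the platform's actual analytics are not described in this text.

```python
from statistics import mean, pstdev


def summarize_window(readings, z_threshold=3.0):
    """Reduce a window of sensor readings to a compact summary.

    Only the summary, including any readings further than `z_threshold`
    standard deviations from the mean, would be sent upstream.
    """
    mu = mean(readings)
    sigma = pstdev(readings)
    anomalies = [x for x in readings
                 if sigma > 0 and abs(x - mu) / sigma > z_threshold]
    return {
        "count": len(readings),
        "mean": mu,
        "min": min(readings),
        "max": max(readings),
        "anomalies": anomalies,
    }


# Ten temperature samples collapse into one small record.
window = [20.0] * 9 + [50.0]
print(summarize_window(window, z_threshold=2.5))
```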

We deliver a hardware and software platform that enables intelligence at the edge of the IoT while maintaining built-in support for cloud connectivity, services, and applications for industrial IoT, digital health, smart farming, and smart buildings. We carefully select the sensors, devices, and machines to operate in a secured network. With a properly planned computing device and architecture, less data has to travel over the network, which means fewer bottlenecks and frees up infrastructure. The platform implements secure hash algorithms to enhance the security of the edge network. The edge solution stack can be configured locally, with no intervention from the cloud, but also knows when to use the cloud. We offer a proven edge AI stack and tools for multiple use cases, allowing enterprises to deploy and exploit the power and possibilities of edge intelligence solutions.
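
The text does not specify how secure hash algorithms are applied. One plausible use is to let a gateway detect tampering with sensor messages by attaching a keyed SHA-256 digest (HMAC) to each one. The sketch below uses only Python's standard `hashlib`, `hmac`, and `json` modules; the function names, message layout, and shared key are assumptions made for illustration.

```python
import hashlib
import hmac
import json


def sign_reading(reading, key):
    """Serialize a reading and attach an HMAC-SHA256 tag computed with `key`."""
    # Sorted keys and fixed separators give a stable byte representation.
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_message(message, key):
    """Return True only if the tag matches the payload under `key`."""
    expected = hmac.new(key, message["payload"].encode("utf-8"),
                        hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, message["tag"])


SHARED_KEY = b"demo-key-for-illustration-only"  # hypothetical key
message = sign_reading({"sensor": "temp-1", "value": 21.5}, SHARED_KEY)
print(verify_message(message, SHARED_KEY))
```

A keyed hash protects integrity and authenticity, not confidentiality; a deployment that also needs secrecy would pair it with transport encryption.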